This article was published on April 26, 2021 by Alan Chan, co-founder of Heptabase, one month before he started building the early-alpha version of Heptabase.

Foreword

In My Vision: The Context, I mentioned that my vision for the next ten years is “To accelerate the speed of the human’s intellectual and technological progress to the theoretical limit.” To achieve this vision, I want to build a truly universal Open Hyperdocument System of my own redesign. In My Vision: A New City, I mentioned that I want to build on that system the next generation of the Internet, one that embodies certain ambitions, and use it to empower all of humanity.

Before I explain the Open Hyperdocument System that I redesigned for the next generation of the Internet, in this article I want to talk about the visions of computer pioneers and thinkers in the 1960s, when personal computers, object-oriented programming, graphical user interfaces, Ethernet, and other technologies had not yet been invented.

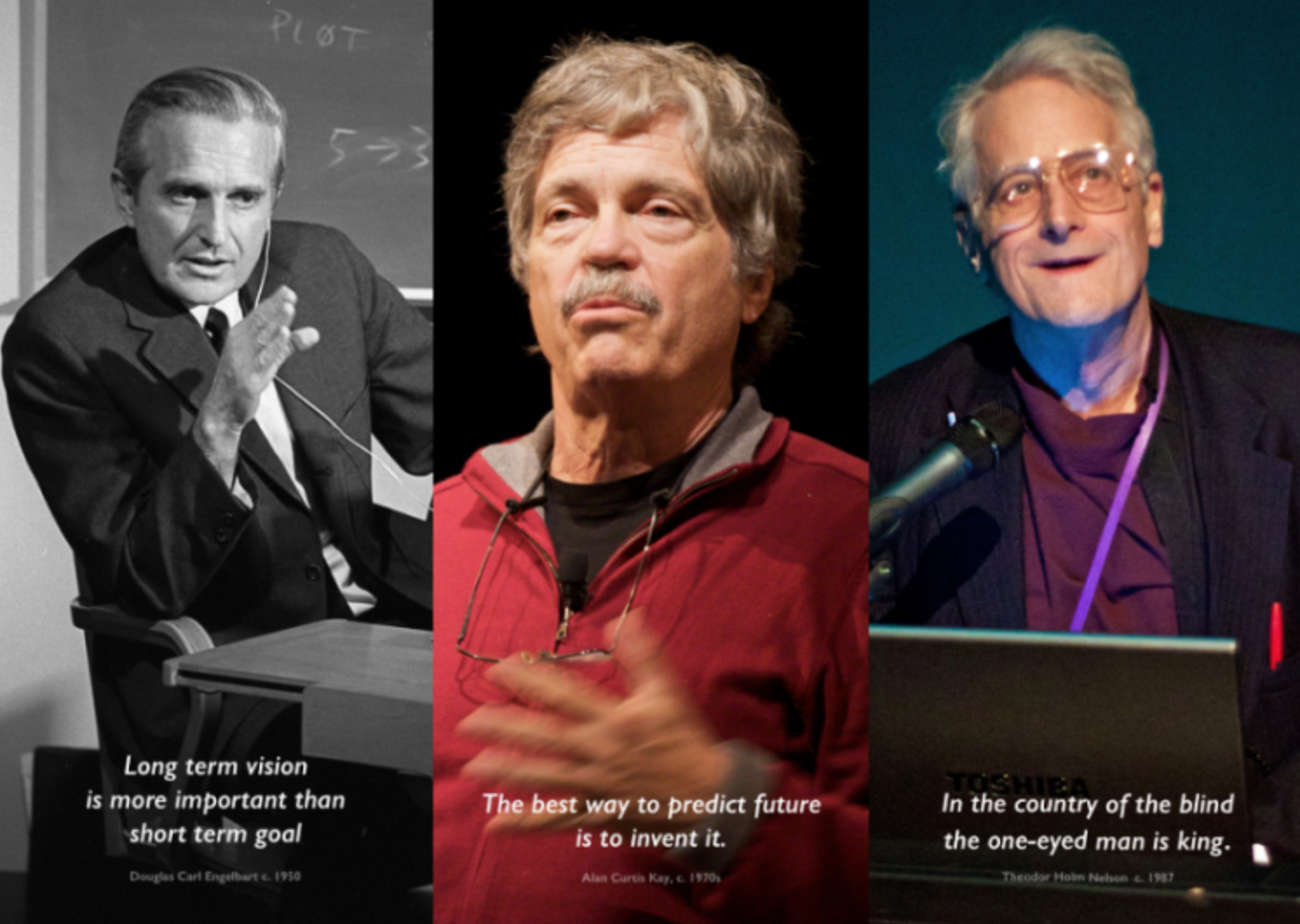

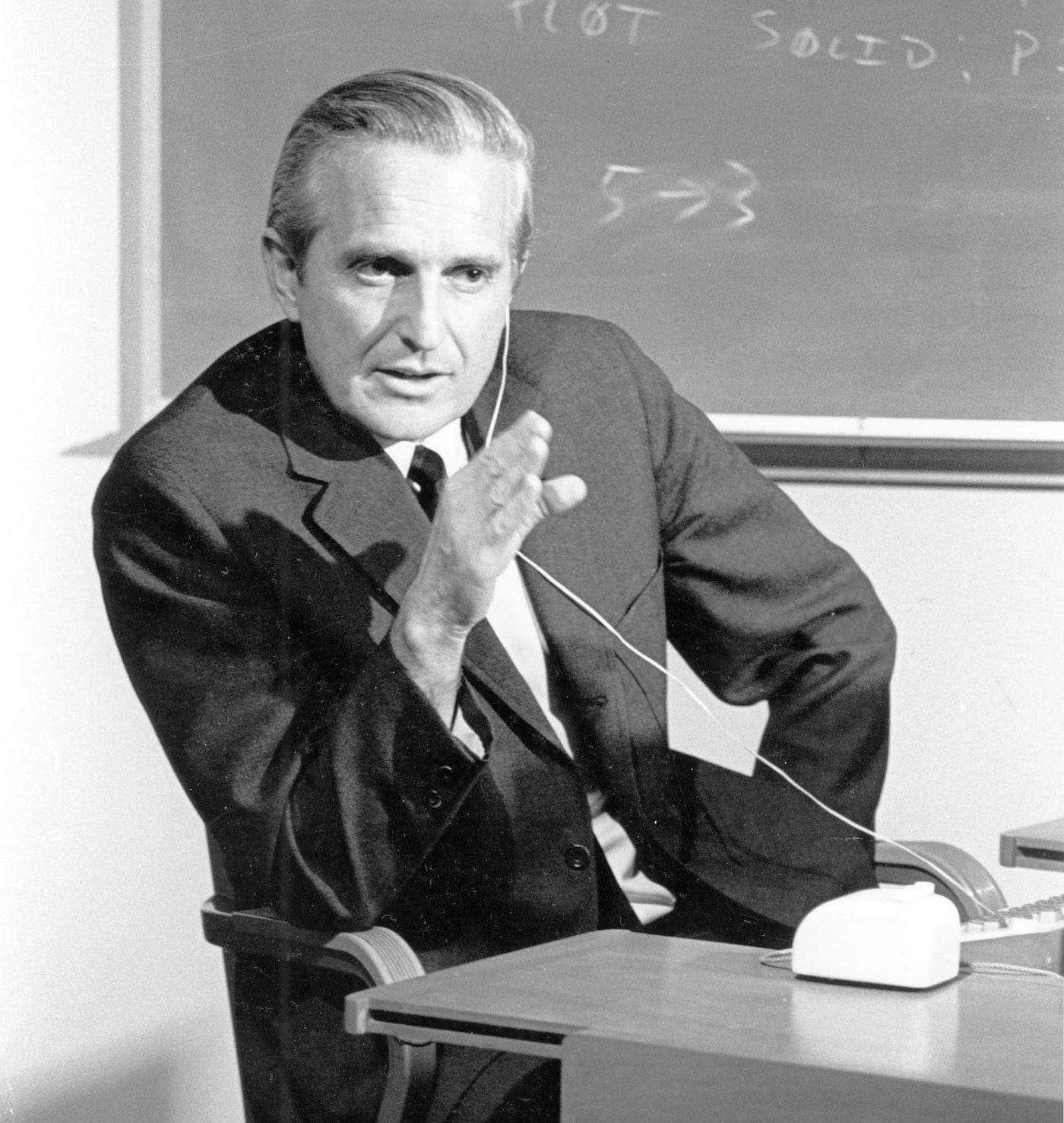

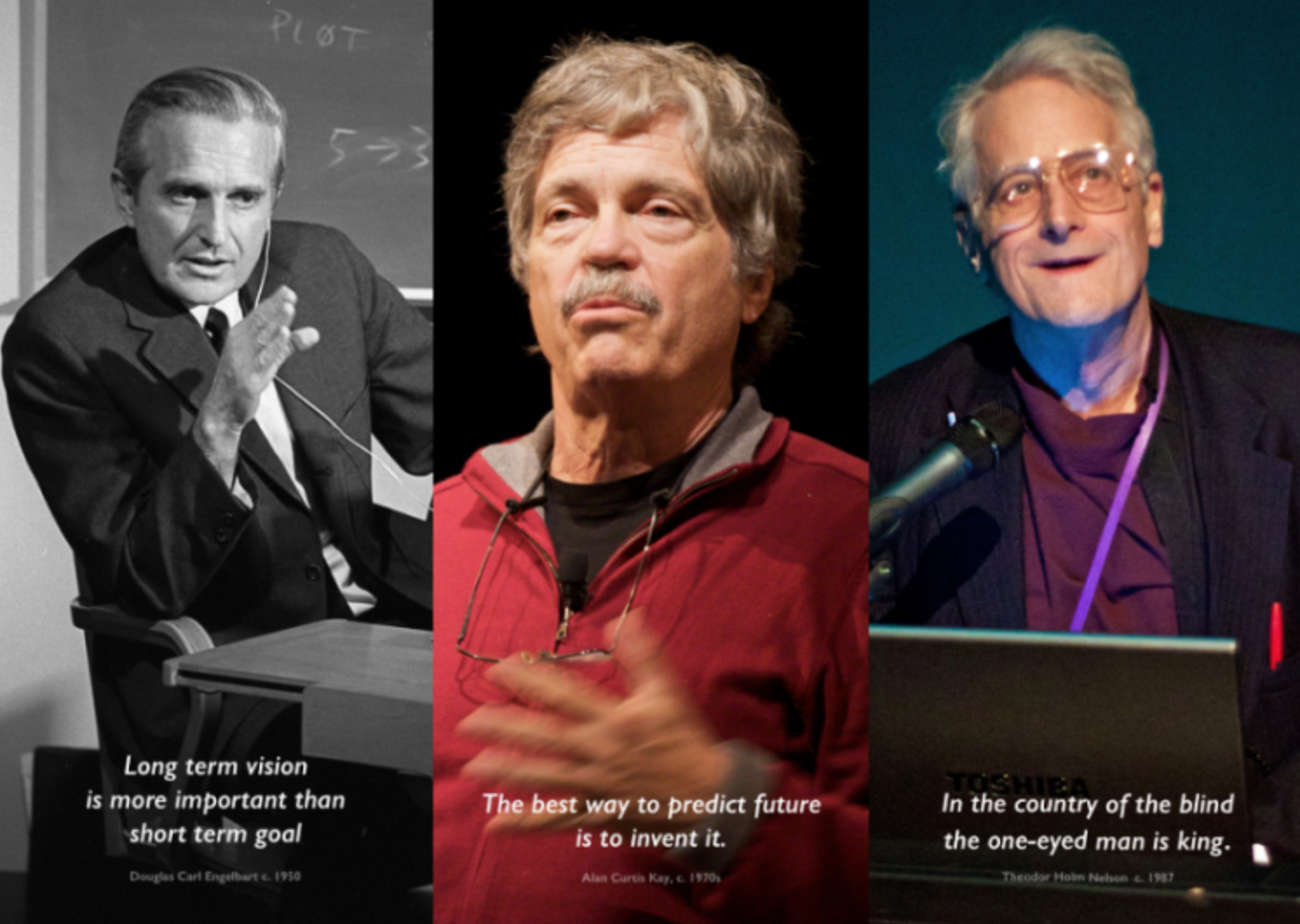

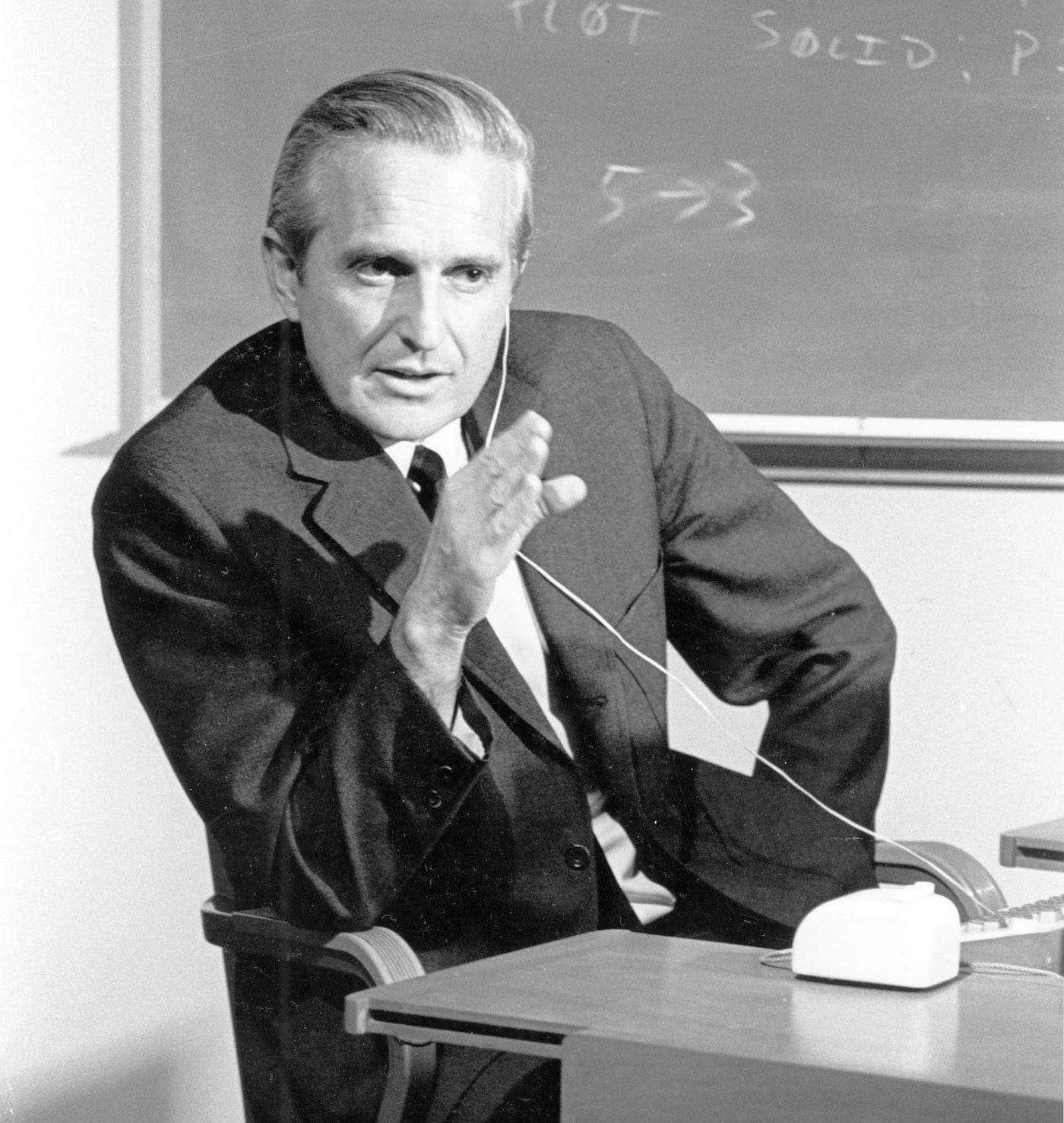

Douglas Engelbart: Augmenting Collective IQ

Douglas Engelbart was an electrical engineer who earned his Ph.D. at UC Berkeley. Two years after receiving it, he took a position at Stanford Research Institute (SRI) and began his research on “Augmenting Human Intellect.” His choice of topic can be traced back to the 1950s, when the young Engelbart came to the following realizations:

- All the complex problems in history have been solved by a group of adults working together.

- The complexity and urgency of the problems facing human society today are increasing at an unprecedented rate.

- There are limits to what humans can do with the basic capabilities they are born with. Whenever we want to understand complex situations and solve complex problems, we have to introduce other means to augment our basic capabilities. These means fall into four broad categories: artifacts, language, methodologies, and training.

Based on these three findings, Engelbart believed that if we are serious about solving problems that are more complex and urgent than ever, we must augment humanity’s ability to solve problems collectively, which he defined as collective intelligence. And the most effective way to augment human collective intelligence is to treat “a group of trained human beings together with their artifacts, language, and methodology” as a single system and find ways to optimize that system’s overall efficiency.

Through this systems thinking, Engelbart found that whenever the rules of human society (e.g., language, patterns of thought, patterns of behavior, education, ways of doing things, organizational structures, universal values) change, new needs emerge, and people create new tools to meet those needs; conversely, whenever old tools become obsolete and new tools become popular, the new tools in turn reshape the rules of human society. This idea is very similar to what historian Yuval Noah Harari proposed in Homo Deus:

Why did Marx and Lenin succeed where Hong and the Mahdi failed? Not because socialist humanism was philosophically more sophisticated than Islamic and Christian theology, but rather because Marx and Lenin devoted more attention to understanding the technological and economic realities of their time than to perusing ancient texts and prophetic dreams.

Marx and Lenin studied how a steam engine functions, how a coal mine operates, how railroads shape the economy and how electricity influences politics. There can be no communism without electricity, without railroads, without radio. You couldn’t establish a communist regime in sixteenth-century Russia, because communism necessitates the concentration of information and resources in one hub. ‘From each according to his ability, to each according to his needs’ only works when produce can easily be collected and distributed across vast distances, and when activities can be monitored and coordinated over entire countries.

Socialism, which was very up to date a hundred years ago, failed to keep up with the new technology. Leonid Brezhnev and Fidel Castro held on to ideas that Marx and Lenin formulated in the age of steam, and did not understand the power of computers and biotechnology. Liberals, in contrast, adapted far better to the information age. This partly explains why Khrushchev’s 1956 prediction never materialised, and why it was the liberal capitalists who eventually buried the Marxists. If Marx came back to life today, he would probably urge his few remaining disciples to devote less time to reading Das Kapital and more time to studying the Internet and the human genome.

After realizing that humans and tools co-evolve, Engelbart arrived at a new understanding of what tools mean: when we create a tool, we should think not only about what the tool can do but also about how it might affect human society. As a toolmaker with a vision, Engelbart asked: What kind of tools can we build to help human society evolve new rules, so that the new tools and the new rules reinforce each other and augment the collective intelligence of mankind?

Engelbart finally came up with his answer: We need to create Dynamic Knowledge Repositories (DKRs), a new kind of tool that can integrate and update the latest knowledge held by a group of people and allow others to use it anytime, anywhere. Such tools would not have been possible in the print era, because paper, as a static physical medium, makes it difficult to efficiently update or integrate collective knowledge. In the 1960s, however, computer technology was taking off, and it made Dynamic Knowledge Repositories possible.

Engelbart’s vision and career culminated on December 9, 1968, when he and his team at SRI demonstrated the prototype they had built: the oN-Line System (NLS). It was the first public demonstration of windows, hypertext, graphics, command input, video conferencing, the computer mouse, word processing, dynamic file linking, revision control, a collaborative real-time editor, and more. The demo shook up the computer world. NLS was later regarded as the earliest prototype of modern computing, and the demo came to be known as “The Mother of All Demos.”

In 1968, however, the NLS system cost millions of dollars to build, making it impossible to commercialize. Moreover, the Dynamic Knowledge Repositories Engelbart envisioned relied on “computer networks” through which people could connect and collaborate in real time. But at a time when centralization was frowned upon, young engineers were suspicious of the idea of letting other people work on their computers through such networks. For all of these reasons, many of Engelbart’s core engineers left SRI around 1970 to work on the “personal computer” at Xerox PARC.

After the Vietnam War, ARPA and NASA cut their funding to SRI, and SRI’s managers at the time did not understand Engelbart’s vision. Together, these factors led to the decline of Engelbart’s career and his dismissal in 1976. Without resources or staff, Engelbart’s talents went untapped for a long time; only in the 1990s did people begin to appreciate his contributions to the computer industry.

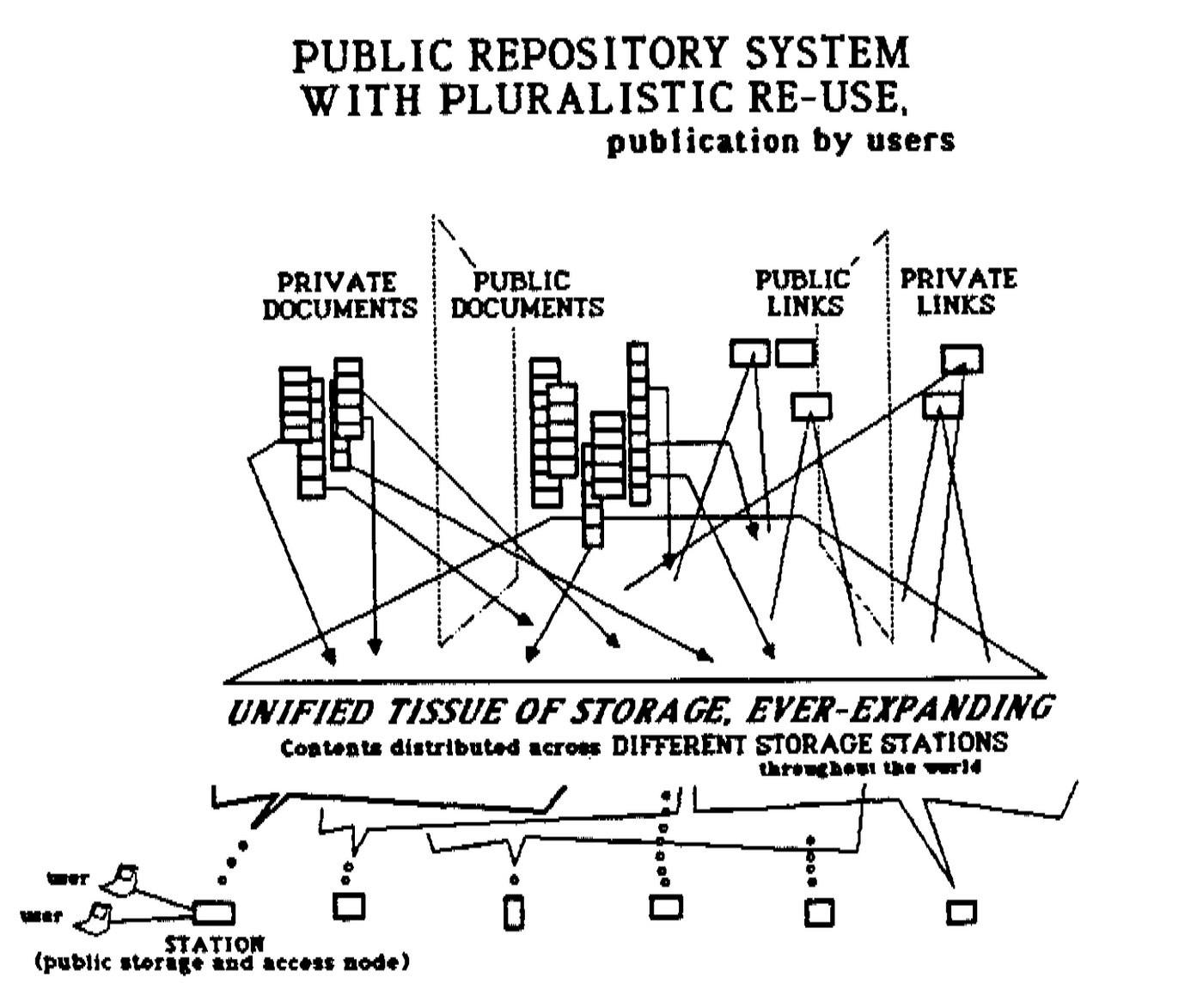

Over the years, Engelbart continued to advocate his vision of augmenting humans’ collective intelligence and proposed the design of the “Open Hyperdocument System,” the culmination of his many years of research, of which NLS was only an early prototype. In Engelbart’s vision, the Internet would host many Dynamic Knowledge Repositories, large and small, and all of them, along with the tools surrounding them, would communicate through the same protocols. Yet decades after it was proposed, the Open Hyperdocument System has still not been implemented on the Internet as we know it today.

Ted Nelson: Literary Machines

Ted Nelson was raised in an artistic family: his father was an Emmy-winning director, and his mother was an Oscar-winning actress. Unlike Engelbart, who came from an engineering background, Nelson majored in philosophy and earned a master’s degree in sociology from Harvard. While studying for that degree, he took a course on machine language and assemblers and realized that the computer was neither just a mathematical machine nor just an engineering machine, but a universal machine that could do all kinds of things. From then on, he wanted to use this machine to change the way we think about “writing.”

Nelson has long felt that linear writing shackles the mind. He pointed out that our minds consist of networks of interconnected ideas, and that the goal of writing should be to present the reader with the “real structure” of how ideas are connected. Linear writing, however, has three main problems:

First, you have to force these ideas into a particular sequence.

Second, you have to make trade-offs and leave out ideas that can’t fit into the current sequential order.

Third, different types of readers call for the same content to be presented in different narrative structures. Ideal writing should decouple content from structure. Authors should have a unified “content library” from which they can write articles with different narrative structures for different readers. These articles can be regarded as different versions of the same content, existing in parallel in the same time and space.

To solve these problems with linear writing, Nelson coined the term “hypertext,” meaning “non-sequential text with free user movement.” Nelson pointed out that hypertext had long been part of our daily lives: a Post-it note in a book, an annotation in the corner of a newspaper, a reference at the end of a paper, or a sidebar on a magazine page are all hypertext. The computer, he argued, is the medium best suited to implementing hypertext in all of history, and the one that will disrupt the publishing industry.

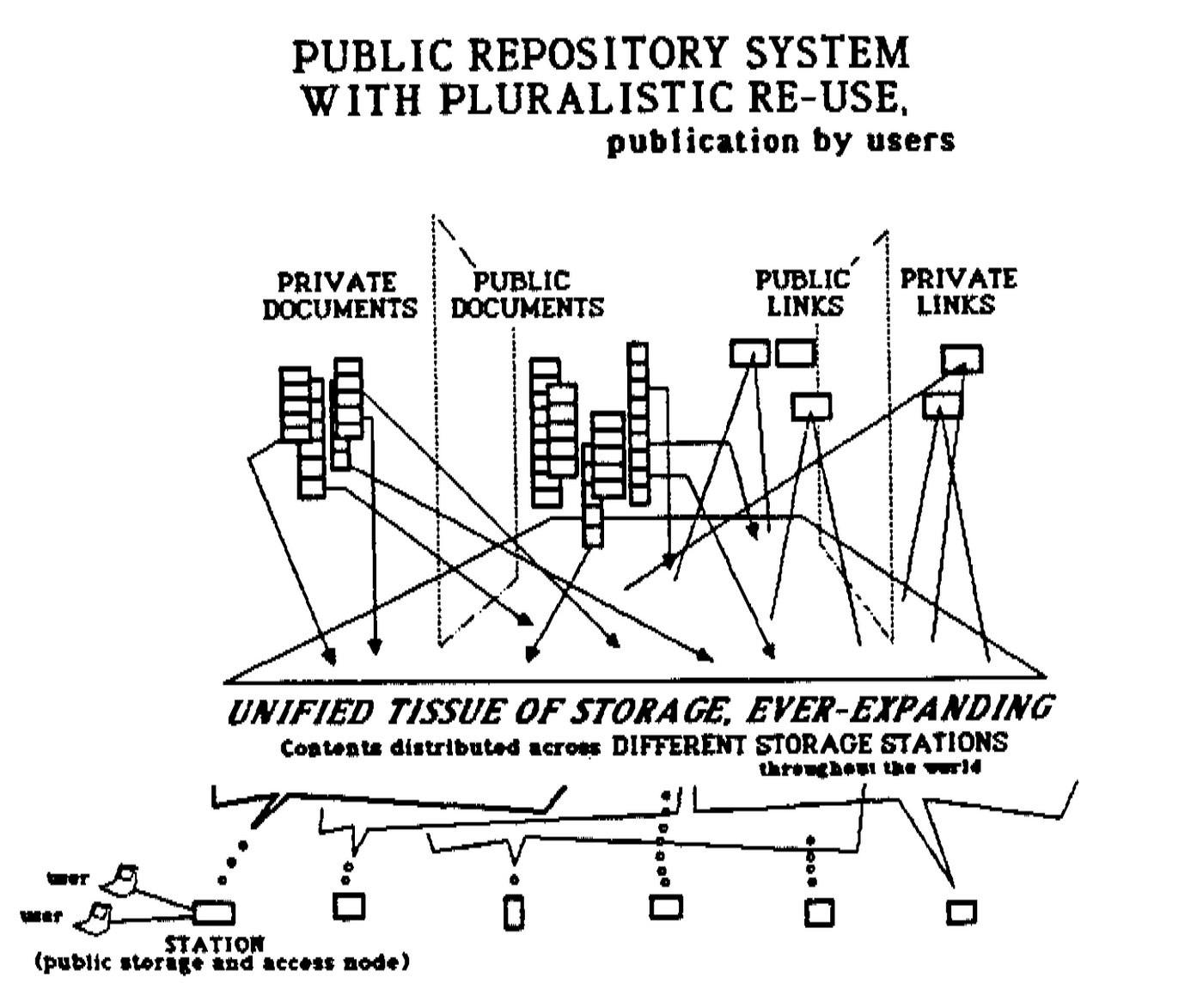

Nelson’s vision was to use computer technology to create a hypertext-based digital repository for worldwide electronic publishing, open to all of humanity, to which anyone could contribute new content. You would be free to use any content in this repository to create your own work, paying only a small fee to its original author. You could assemble the same content into different versions and reuse it in different articles through transclusion (including content by reference rather than by copying). You could also create hyperlinks between pieces of content. What’s more, all of these embedding and linking relationships would be stored bidirectionally, so when you look at a piece of content, you can find all the other content related to it.
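The two mechanisms in this vision, transclusion and links stored in both directions, can be illustrated with a small data structure. The sketch below is in Python and is not Xanadu’s actual design; the names `Document`, `Repository`, `render`, and `related` are hypothetical, chosen only for this illustration. Reused content is stored once and pulled in by reference at render time, and every link is indexed at both ends. It leaves out royalties and versioning and assumes there are no cyclic transclusions.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical document: an ordered list of parts, each literal text or a reference."""
    doc_id: str
    parts: list = field(default_factory=list)  # ("text", str) or ("transclude", doc_id)


class Repository:
    """Hypothetical content store with transclusion and two-way link indexes."""

    def __init__(self):
        self.docs = {}
        self.outgoing = defaultdict(set)  # source id -> ids it links to
        self.incoming = defaultdict(set)  # target id -> ids that link to it

    def add(self, doc):
        self.docs[doc.doc_id] = doc
        # Each transclusion is also recorded as a link in both directions.
        for kind, value in doc.parts:
            if kind == "transclude":
                self.link(doc.doc_id, value)

    def link(self, source, target):
        # Store the link at both ends so it can be followed either way.
        self.outgoing[source].add(target)
        self.incoming[target].add(source)

    def render(self, doc_id):
        # Transcluded text is fetched from the original at render time,
        # so an edit to the original appears everywhere it is reused.
        # Assumes no document transcludes itself, directly or indirectly.
        pieces = []
        for kind, value in self.docs[doc_id].parts:
            pieces.append(value if kind == "text" else self.render(value))
        return "".join(pieces)

    def related(self, doc_id):
        # Everything connected to this document, whichever side made the link.
        return sorted(self.outgoing[doc_id] | self.incoming[doc_id])


repo = Repository()
repo.add(Document("quote", [("text", "Links must go both ways.")]))
repo.add(Document("essay-a", [("text", "Nelson wrote: "), ("transclude", "quote")]))
repo.add(Document("essay-b", [("text", "A shorter take. "), ("transclude", "quote")]))
print(repo.render("essay-a"))  # Nelson wrote: Links must go both ways.
print(repo.related("quote"))   # ['essay-a', 'essay-b']
```

Because both essays hold a reference to `quote` rather than a copy, editing `quote` changes both rendered essays, and `related("quote")` finds every article that reuses it without either essay having to be modified.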

To achieve this vision, Ted Nelson started Project Xanadu in the 1960s. But even though the Xanadu team had deep insight into the potential of computers, their ideas were far ahead of their time, and the technology needed to turn Nelson’s vision into reality did not yet exist. At the same time, Project Xanadu was long criticized for its haphazard design process, poor management, and lack of attention to market needs, and as a result it never shipped a product that was tested in the market. After forty years, Project Xanadu had become the longest-running vaporware in the history of technology. The winner in the market was the World Wide Web, designed by Tim Berners-Lee with HTML (Hypertext Markup Language) and HTTP (Hypertext Transfer Protocol).

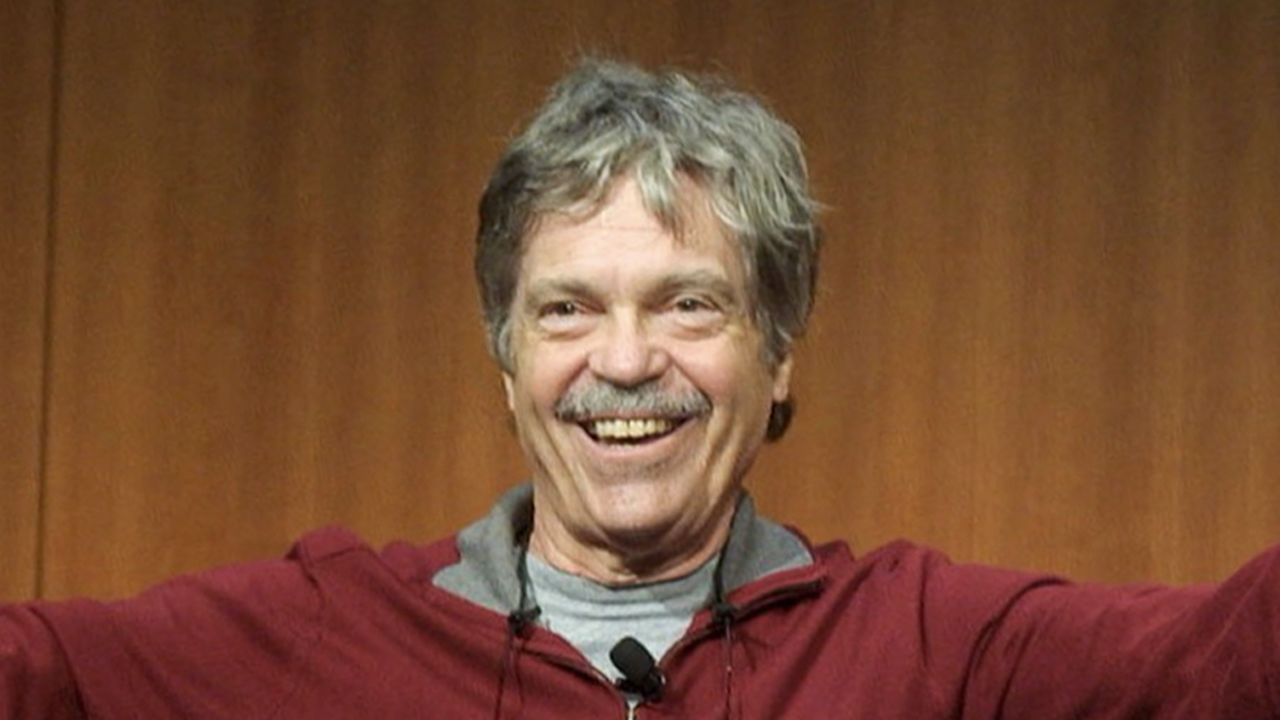

Alan Kay: Dynamic Medium

Alan Kay was raised in an unusual family. His father was a physiologist who designed prosthetic limbs, and his mother was a musician. He majored in molecular biology with a minor in mathematics and was also a professional jazz guitarist. After college, Kay received a master’s degree in electrical engineering in 1968 and a Ph.D. in computer science in 1969. In graduate school, he saw Douglas Engelbart’s “The Mother of All Demos” and was deeply inspired. In 1970, Kay joined Xerox PARC to build personal computers, work that laid the technical foundation for the computer industry’s future development.

For Kay, science, art, and technology are not fundamentally different; they are all forms. The only difference among them is who serves as the ultimate critic. The ultimate critic of scientific forms is Nature: a scientific form is good only if it helps us better understand how Nature works and makes the invisible visible. For example, we can’t see electromagnetic waves, but we can study them with mathematical symbols. The ultimate critics of art forms, in contrast, are human beings: the quality of an art form depends on human subjective judgment, and each of us can interpret its meaning in our own way. Technological forms are special. They are a mixture of scientific and art forms: good technology should conform to the principles of Nature but also help people live better lives.

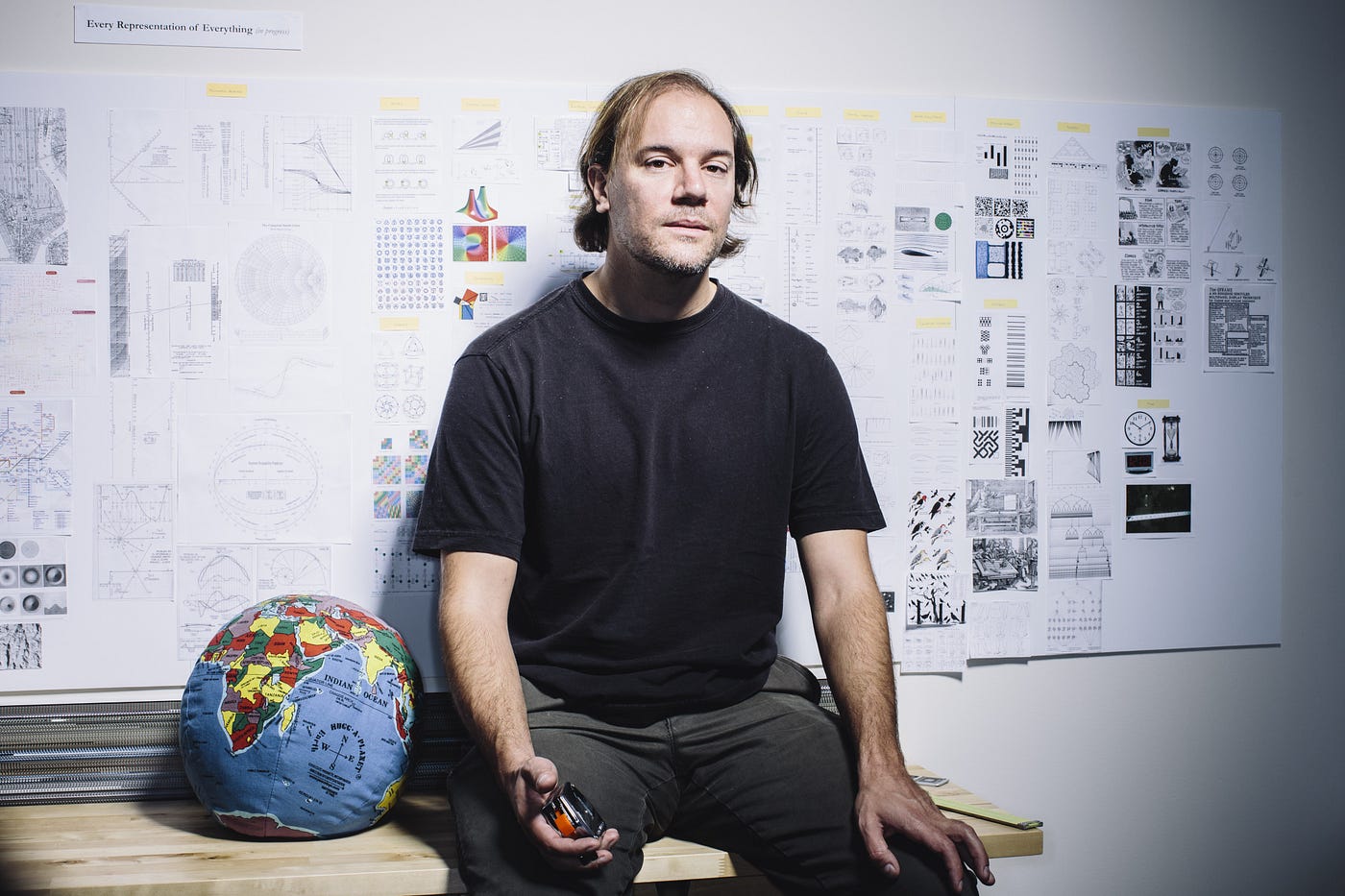

When Kay learned about computers, he realized that their real power was their ability to simulate arbitrary descriptions instantly. Computers can not only perform calculations but also give immediate feedback, which paper cannot do. This dynamic nature convinced Kay that computers could become a “metamedium” for all media. To understand what Alan Kay was thinking, we need to introduce Bret Victor, an expert in modern human-computer interaction.

Victor is a principal investigator at Dynamicland, a human-computer interaction research lab formerly known as the Communications Design Group (CDG), which Victor and Kay co-founded in 2014. Victor pointed out that whatever ideas we want to express, we always need some representation to express them, such as body language, spoken language, written words, mathematical symbols, musical scores, or visual images. The function of a representation is to help us think about things we cannot think about with other representations. For example, ancient philosophers mainly used spoken language and written words to think about the physical principles behind the world, whereas modern scientists have the advantage of a newer representation, “mathematical symbols,” for studying physics. Many physical theories could only be discovered with mathematical symbols. Similarly, Feynman invented a new representation, the Feynman diagram, because ordinary mathematical notation could not clearly express the ideas of quantum field theory.

To create great ideas, we need great representations, and to invent great representations, we need a powerful medium. Spoken language is a representation that uses air as its medium; written language is a representation that uses paper as its medium. A useful medium has two characteristics. First, useful representations can live on it. Second, humans can use their capabilities to create useful representations on it. Air is a medium not only because it can carry sound waves of different frequencies but also because humans can produce those sound waves with their vocal cords. Paper is a medium not only because it can carry written symbols and visual images but also because humans can draw them with their hands.

The purpose of a medium is to “enable humans to use their capabilities to create representations of ideas,” and how good a medium is depends on whether the representations it can carry are rich enough and whether the process of creating them is simple enough. This is what Kay kept in mind when he looked at the computer. The Smalltalk project, which Kay led at Xerox PARC, aimed to make the computer a medium that anyone in any field could use. At the time, the Smalltalk team focused on building two things:

- A programming language that can be intuitively understood and used by the human brain and effectively interpreted by a computer.

- A set of user interfaces that allow people to effectively interact with computers.

These two things eventually became known as object-oriented programming and the graphical user interface (GUI).

In inventing object-oriented programming, the Smalltalk team followed the design principle that anyone should be able to use the language to create useful representations on a computer and clearly define the rules those representations must follow. For example, a composer could write a music application by abstracting concepts such as Note, Melody, Score, and Timbre into different “classes,” defining the attributes and rules of these classes (e.g., storing a note’s frequency in a floating-point variable) and the relationships between them (e.g., a melody is an array of notes).

When the application runs, the computer instantiates these “classes” as “objects,” which communicate with each other within the application according to predefined rules and change state based on the information they receive. The composer can “see” these objects through the graphical user interface and “hear” them through the speaker. At this point, the composer’s application is a new medium for expressing musical ideas, and the objects he sees and hears in it are the representations he invented in that medium. The composer can change a few melodies and immediately hear the new music. By adding a few lines of code, he can visualize the music and understand it with his eyes. He can also immediately hear how the same score sounds when played on different instruments.
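To make the composer example concrete, here is a minimal sketch in Python rather than Smalltalk, so it shows the object-oriented idea, not the Smalltalk team’s code. Only the class names Note, Melody, Score, and Timbre come from the text; their fields and the `transpose` and `events` methods are hypothetical, and actual audio synthesis is left out. A note’s frequency is a float, a melody is a list of notes, and a score pairs a melody with a timbre.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    frequency: float  # pitch in hertz, stored as a floating-point value
    duration: float   # length in beats


@dataclass(frozen=True)
class Timbre:
    name: str
    harmonics: tuple  # relative overtone strengths; would drive synthesis (omitted here)


@dataclass
class Melody:
    notes: list  # a melody is an ordered list of Note objects

    def transpose(self, semitones):
        # Equal temperament: each semitone multiplies the frequency by 2 ** (1/12).
        # Returns a new Melody and leaves the original untouched.
        ratio = 2 ** (semitones / 12)
        return Melody([Note(n.frequency * ratio, n.duration) for n in self.notes])


@dataclass
class Score:
    melody: Melody
    timbre: Timbre

    def events(self):
        # What a synthesizer would receive: (start beat, frequency, duration, instrument).
        start, out = 0.0, []
        for note in self.melody.notes:
            out.append((start, round(note.frequency, 2), note.duration, self.timbre.name))
            start += note.duration
        return out


tune = Melody([Note(440.0, 1.0), Note(493.88, 0.5), Note(523.25, 0.5)])
piano = Timbre("piano", (1.0, 0.5, 0.25))
flute = Timbre("flute", (1.0, 0.1))

print(Score(tune, piano).events())
print(Score(tune.transpose(12), flute).events()[0])  # (0.0, 880.0, 1.0, 'flute')
```

Transposing by 12 semitones doubles every frequency (one octave up), and pairing the same melody with a different `Timbre` object is the code-level counterpart of hearing one score played on a different instrument.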

Representations exist to help us understand a system that we want to understand. In the past, we used words and speech to understand human society, and mathematical symbols and images to understand the universe. Now that object-oriented programming languages have been invented, anyone can use them to invent a new medium on a computer and create different representations on that medium to simulate the system they wish to understand.

Architects can simulate architectural designs in three-dimensional space; doctors can simulate the chemical reaction system of drugs; physicists can simulate hypothetical worlds dominated by certain physical laws; mathematicians can simulate the abstract structure of mathematical formulas; business people can simulate the outcomes of different business decisions; government officials can simulate the effects of new policies on society.

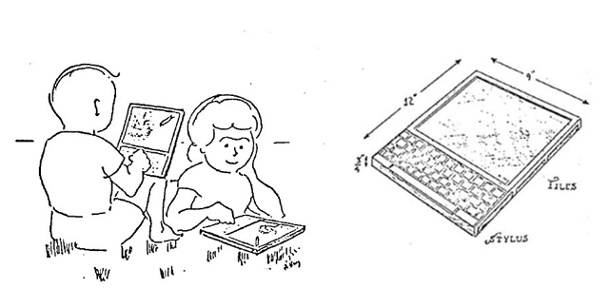

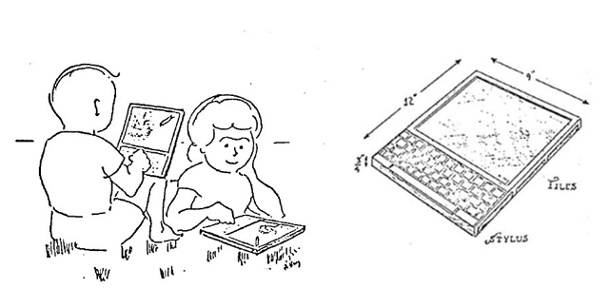

Aside from Smalltalk, Kay’s most widely known idea is the Dynabook, which he sketched after meeting Seymour Papert, an MIT mathematician and computer scientist. Papert was heavily influenced by the developmental psychologist Jean Piaget, who argued that “learning by play” is the most powerful and natural way to learn. That is why Papert co-created Logo, a programming language that children can use to learn mathematical concepts. Deeply inspired by Papert’s work, Kay came up with the idea of the Dynabook, on which children could use a programming language to make space games and learn physics concepts without realizing it.

Kay and Papert were building things around the same core idea: computers will redefine learning. Learning is no longer about passively receiving knowledge but about actively simulating different systems and developing a deeper understanding of the systems being simulated. Education in the 21st century should let children easily simulate different systems on computers, acquire knowledge about those systems, and come to understand that knowledge.

Kay argues that the last qualitative change in how human society thinks came with the Printing Revolution. The great works of science, philosophy, and literature were made possible by printing, which allowed everyone to think and learn using paper as a medium. Now we have a new medium, the computer, which will bring about another qualitative change: the Computer Revolution will give us even more powerful ways of thinking and learning than the Printing Revolution did.

Unfulfilled Visions

Douglas Engelbart wanted to use computers to help adults work together and solve difficult problems collectively. Ted Nelson wanted to use computers to help creators build an open, pluralistic publishing world. Alan Kay wanted to use computers to help humans create powerful representations for simulating and understanding different systems, and thereby spark the second revolution in thought and education in human history. These giants of computing history had big visions in the 1960s, but the constraints of their time have kept those visions from being realized.

Let’s start with Alan Kay.

What Alan Kay has long emphasized, and what now lies at the heart of Bret Victor’s design philosophy, is that the computer is a “dynamic medium.” The essence of a computer is not the keyboard, the mouse, or the screen, but the computational and responsive capabilities that a static medium like paper lacks. We should use this dynamic medium to create dynamic representations that can exist only on it, so that we can think ideas that can be thought only on it.

However, Kay believes that most of the world still lives in the previous paradigm, using computers to simulate old media and create old representations on them. A PDF is an analog of paper, a movie player an analog of television, and a music player an analog of a record player. We use the new medium to do what old media could already do, sometimes not even as well. Just as the 15th-century clergy saw the printing press and only wanted to use it to print the Bible, people today have computers but have not yet unleashed the full potential of this dynamic medium.

Kay also has a major complaint about the way people use computers today. Many people compare the Apple iPad to Kay’s Dynabook, but for Kay the two are entirely different. Under Moore’s law, it was bound to happen sooner or later that computers would shrink into tablets. The point of the Dynabook was not that it was a tablet but that it revealed the most important use of computers: to help us think, create, and learn. Kay inspired Steve Jobs to turn Apple’s products into “bicycles for the mind.” Yet for many years, most people have still used computers primarily as consumption devices.

Let’s move on to Ted Nelson.

Ted Nelson is arguably the World Wide Web’s harshest living critic. His views on today’s Web can be found in Ted Nelson on the Web as Hypertext and Ted Nelson’s Computer Paradigm, Expressed as One-Liners:

Today’s nightmarish new world is controlled by “webmasters”, tekkies unlikely to understand the niceties of text issues and preoccupied with the Web’s exploding alphabet soup of embedded formats. XML is not an improvement but a hierarchy hamburger. Everything, everything must be forced into hierarchical templates! And the “semantic web” means that tekkie committees will decide the world’s true concepts for once and for all.

HTML is precisely what we were trying to PREVENT — ever-breaking links, links going outward only, quotes you can’t follow to their origins, no version management, no rights management.

Markup must not be embedded. Hierarchies and files must not be part of the mental structure of documents. Links must go both ways. All these fundamental errors of the Web must be repaired. But the geeks have tried to lock the door behind them to make nothing else possible.

However much Nelson hates the World Wide Web, we have to admit that it is the world we live in. Huge ecosystems have been built on it, and correcting these “fundamental errors” from the ground up is extremely difficult. Alan Kay commented on Ted Nelson’s career at the Intertwingled Festival:

An ancient proverb says that, in the country of the blind, the one-eyed man is king. Robert Heinlein’s version of this proverb is that, in the country of the blind, the one-eyed man is in for one hell of a rough time! My version is that, in the country of blind, the one-eyed people run things and the two-eyed people are in for one hell of a rough time. That said, we owe much of civilization to the insights and suffering of the tiny number of two-eyed people. Ted Nelson was one of those few two-eyed people. We owe much to him, and this is being celebrated today.

A two-eyed person — Ted Nelson — comes up with a glorious symphony of how life will be so much deeper and richer if we just did X, but the regular world acts as a low-pass filter on the ideas. In the end, he is lucky to get a dial tone. The blind won’t see it, and the one-eyed people will only catch a glimpse, but all of them think their sense or glimpse of the elephant is the whole thing. In our day and age, if they think money can be made from their glimpse, something will happen. They want to sell to the mass market of the blind so they will narrow the glimpse down even more. They could be educators and help the blind learn how to see; this is what science has done for the entire human race. But learning to see is a chore, so most, especially marketing people, are not interested. This is too bad, especially when we consider the efforts the two-eyed people like Ted have to go through to even have a glimpse happen. One of the keys is for the two-eyed people to turn into evangelists. Both Ted and our mutual hero, Douglas Engelbart, worked tirelessly over their lifetimes to point out that, in this dial-tone world, the emperor not only has no clothes but his cell phone can’t transmit real music.

Finally, Douglas Engelbart.

Engelbart died in 2013. Many people in 2020 had never heard of him, and many who do know him think of him merely as “the inventor of the mouse” or “an early contributor to computer technology.” But as Alan Kay pointed out, Douglas Engelbart and Ted Nelson were both two-eyed people. If we blind and one-eyed people are to understand the true contributions of the two-eyed to the world, we have to set aside our preconceptions.

Engelbart had a vision, and our world is still a long way from it. The best way to see how far is to look at what other computing giants have said about his vision and the current state of computer technology.

On the day Engelbart died, Bret Victor published an article called A few words on Doug Engelbart, in which he wrote:

Engelbart’s vision, from the beginning, was collaborative. His vision was people working together in a shared intellectual space. His entire system was designed around that intent.

Our computers are fundamentally designed with a single-user assumption through-and-through, and simply mirroring a display remotely doesn’t magically transform them into collaborative environments.

If you attempt to make sense of Engelbart’s design by drawing correspondences to our present-day systems, you will miss the point, because our present-day systems do not embody Engelbart’s intent. Engelbart hated our present-day systems.

Alan Kay made many similar points in his lecture How to Invent the Future and in an interview with Fast Company:

The whole impetus behind the ARPA research and inventions of these things, and particularly people like Engelbart, was to try to invent new tools and new media for humanity to get itself out of its problems. Engelbart, for example, said, “Almost everything important that has a consequence in the adult world is done by adults working together.” This is why his system was collaborative. Here’s an interesting thing. Here’s a Mac. Some people have Linux, some people have Windows on it. Not a single one of the main operating systems today has built into it the thing that Engelbart showed in 1968, which is the intrinsic thing to the ability to share any content that you’re looking at with anybody else, to the point of allowing them to interact with it and to talk back and forth.

All three operating systems we have today that are the main ones are old, old ideas. They don’t even get to where PARC was on the notion of how processes can coordinate with each other. There are many, many other of these things. Because these operating systems are rather similar to each other and because they’re pervasive, unless you use your reality kit, you’re going to think that they’re normal and therefore that’s the way things should be.

You have Tim Berners-Lee, the inventor of the World Wide Web who was a physicist, who knew he would be thrown out of physics if he didn’t know what Newton did. He didn’t check to find out that there was a Douglas Engelbart.

And so, his conception of the World Wide Web was infinitely tinier and weaker and terrible. His thing was simple enough with other unsophisticated people to wind up becoming a de facto standard, which we’re still suffering from. You know, HTML is terrible and most people can’t see it.

At least give us what Engelbart did, for Christ’s sake.

Finally, the best summary of Engelbart, I think, was Ted Nelson’s eulogy in 2013 at a memorial service honoring Engelbart:

The real ashes to be mourned are the ashes of Doug’s great dreams and vision, that we dance around in the costume party of fonts that swept aside his ideas of structure and collaboration.

Don’t get me wrong, the people who gave us all those fonts were idealists too, in their way — they just didn’t necessarily hold a very high view of human potential.

I used to have a high view of human potential. But no one ever had such a soaring view of human potential as Douglas Carl Engelbart — and he gave us wings to soar with him, though his mind flew on ahead, where few could see.

Like Icarus, he tried to fly too far too fast, and the wings melted off. The melt-off began after the Great Demo of 1968. His team dispersed to seek fortunes elsewhere; and he was subordinated to an artificial intelligence department, where his real intelligence was stifled.

All too soon the Augmentation Research Center was gone, fobbed off on an aircraft company.

He was cast out for the next 30 years into the endless spiral of what they call in Hollywood “Development Hell” — trying to find backing.

Let us never forget that Doug Engelbart was dumped by ARPA, Doug Engelbart was dumped by SRI, Doug Engelbart was snubbed by Xerox PARC, and for the rest of his working life he had no chance to take us further.

But for Doug that great demo was only the beginning.

That great demo which defined the corners of our world was only Square One of his endless new checkerboard– the great playing field, the great workplace of sharing, cooperation and understanding he sought to create, and (alas) that only he could imagine.

Just as we can only guess what John Kennedy might have done, we can only guess what Doug Engelbart might have done had he not been cut down in his prime.

Perhaps the Dynamic Knowledge Repository he imagined — the D.K.R. — would not be feasible in a real-world corporation.

Perhaps his notion of accelerating collaboration and cooperation was a pipe dream in this dirty world of organizational politics, jockeying and backstabbing and euphemizing evil.

Of course he was naïve!

Gandhi and Martin Luther King pretended to be naïve, but Doug was the real thing– a luminous innocent, able to do in all innocence what sophisticates could not, would not, dare.

But that naiveté accomplished a dazzling amount in those few years of his Augmentation Research Center, even as the knives were being sharpened for him.

Did he actually have any more great inventions under that halo?

We’ll never know, will we?

Doug hoped eventually to take on all the urgent and complex problems of humanity; dealing with them in parallel he saw as the true and final challenge.

Could he have done it somehow: given us exalted, radical tools for optimization and agreement, in this urgent complex world of hurt and hatred?

We’ll never know, will we?

But who better should have had the chance to try? To quote Joan of Arc, from Shaw’s play about her:

“When will the world be ready to receive its saints?”

I think we know the answer: when they are dead, pasteurized and homogenized and simplified into stereotypes, and the true depth and integrity of their ideas and initiatives are forgotten.

But the urgent and complex problems of mankind have only grown more urgent and more complex.

It sure looks like humanity is circling the drain. To quote the great poet Walt Kelly:

“The gentle journey jolts to stop. The drifting dream is done. The long-gone goblins loom ahead– The deadly, that we thought were dead, Are waiting, every one.”

And here we twiddle in a world of computer glitz, as the winds rise, and the seas rise, and the debts rise, and the terrorists rise, and the nukes tick.

So I don’t just feel like I’ve lost my best friend.

I feel like I’ve lost my best planet.

Conclusion

In this article, I have shared the visions of Douglas Engelbart, Ted Nelson, and Alan Kay, three computer pioneers and thinkers of the 1960s, along with where those visions stand today. As I mentioned in My Vision: The Context and My Vision: A New City, I believe these pioneers came up with many profound ideas, some of which were difficult to implement in the past but are now becoming feasible.

Building on my research into the work of these pioneers and into contemporary technological developments, I will begin answering the following questions in the next article: What kind of Internet am I trying to build? What is my strategy for building it? Where do I want this new Internet to take the future of humanity?
